Overview

EduVerse Pro was born from the challenge of bridging the gap between abstract academic concepts and tangible understanding in higher education. Traditional lectures often fail to engage students with complex 3D subjects like anatomy or quantum physics. Leveraging AR, EduVerse Pro transforms lecture halls into interactive, collaborative spaces, with no VR budgets or headsets required. At its core, it addresses the UX problem of cognitive overload in learning, offering intuitive tools for professors and students to visualize, interact with, and retain complex content.

Problem Approach

Main Challenge

Professors: Time constraints, outdated tools, and declining student engagement.

Students: Difficulty visualizing spatial concepts, fragmented review materials.

Approach

User Interviews: Highlighted pain points like “too many clicks” and “lost track of fields.”

Competitor Analysis: Benchmarked against existing educational AR systems.

Desk Research

Key Pain Points:

Synthesis

User Persona

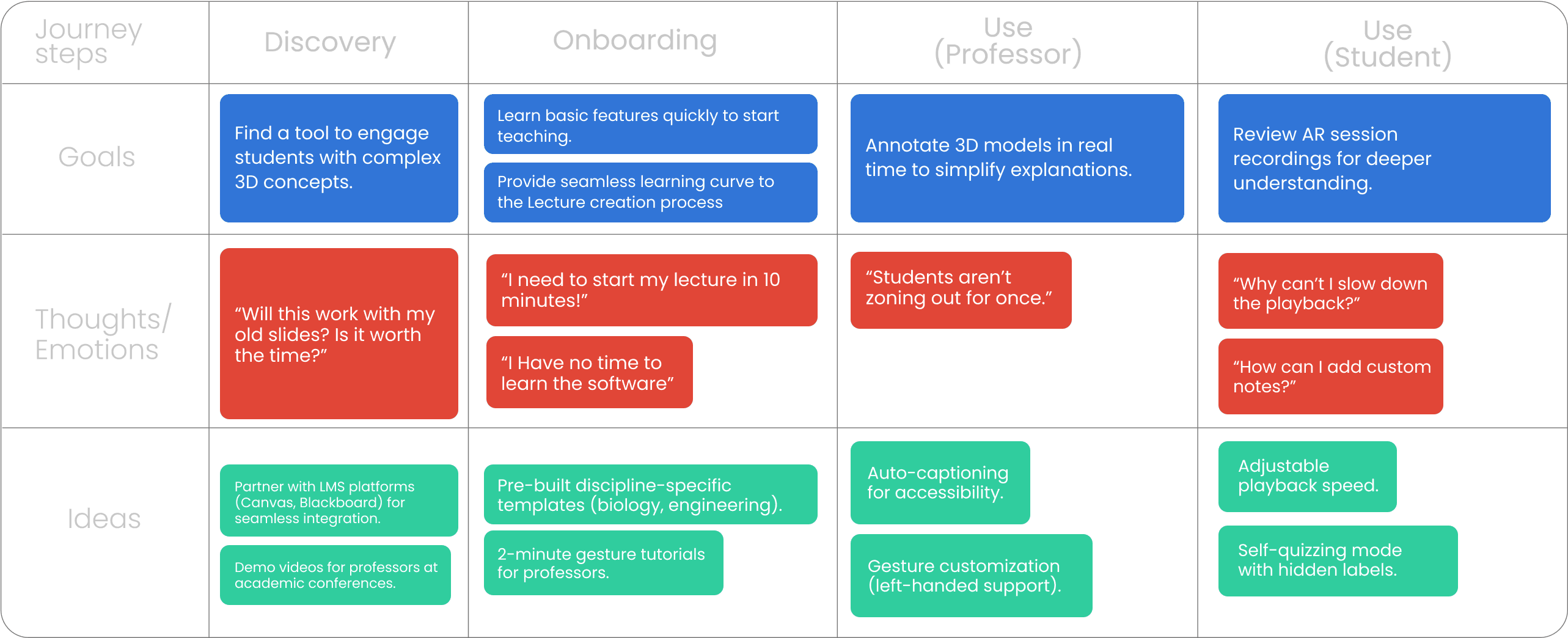

User Journey

3D objects and Notes system

Pre-made 3D object library

AR Session Recordings

Main Challenge

The design system drew inspiration from VisionOS design principles, merging Apple’s spatial interaction frameworks with imaginative 3D environments. It established a visual foundation that balances functional minimalism

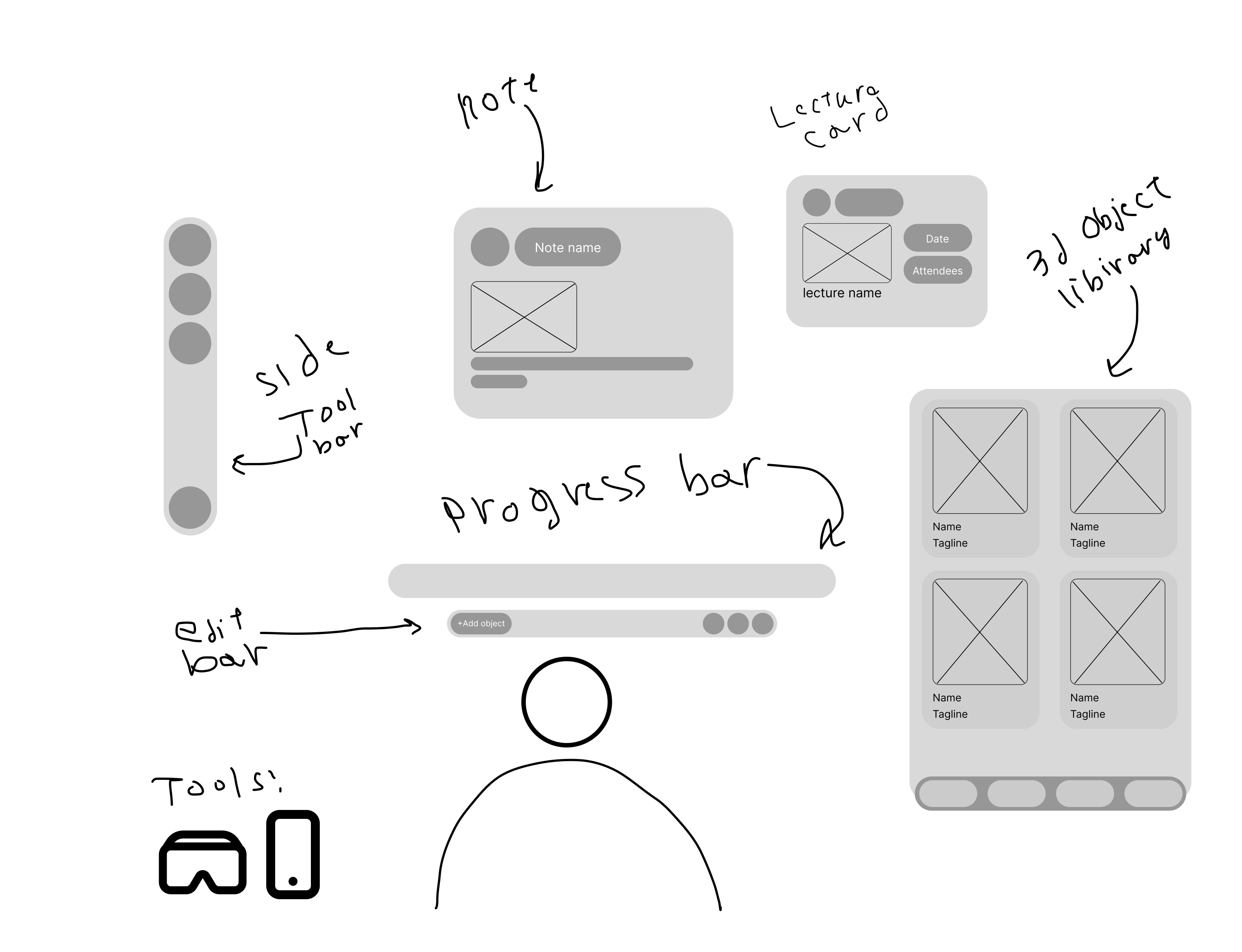

Lo-fi creations

The lo-fi phase of EduVerse Pro focused on rapidly testing core interactions while prioritizing spatial usability and minimizing cognitive load. Here’s how it shaped the final product:

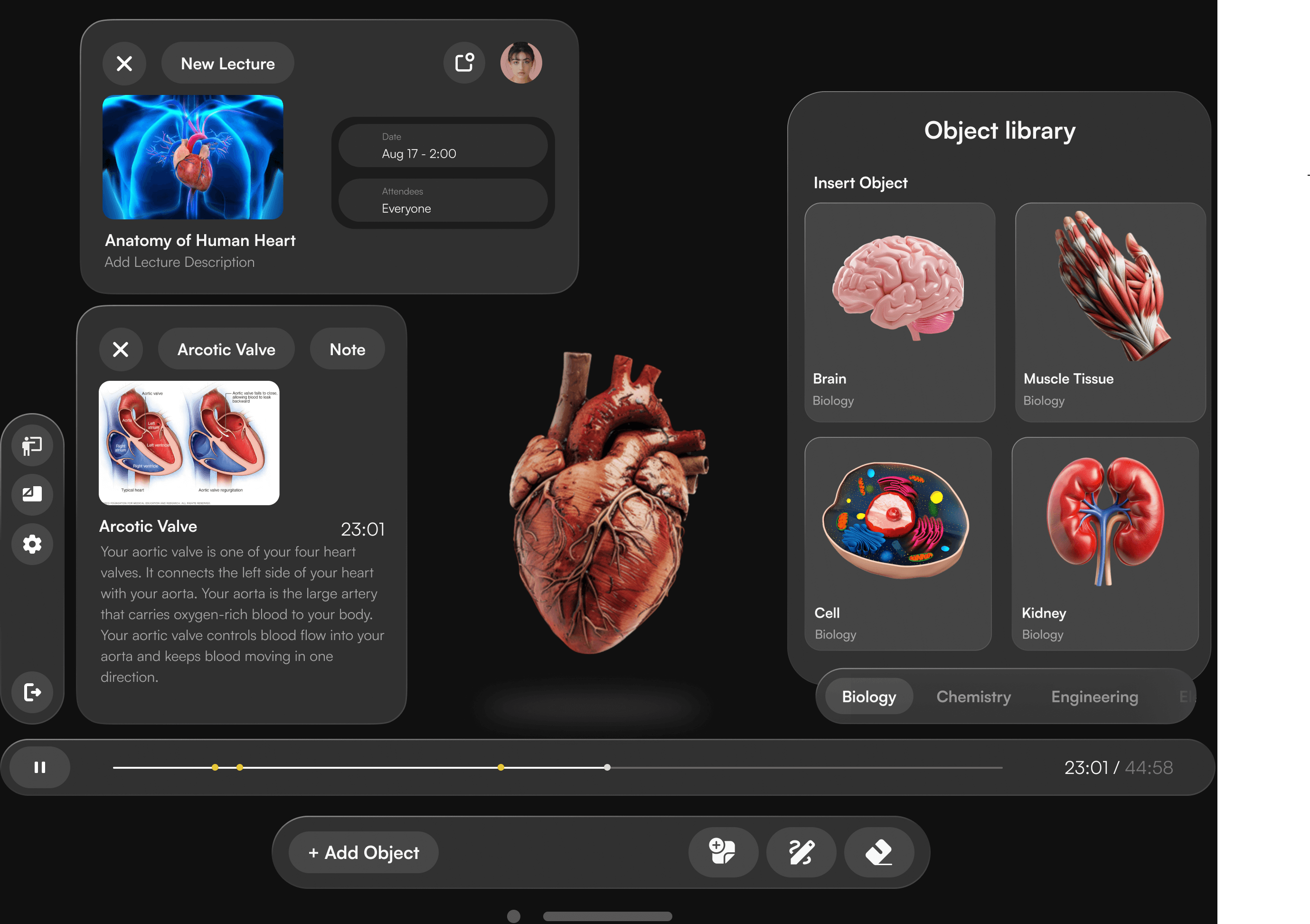

High Fidelity components

The hi-fi phase transformed validated lo-fi concepts into polished, immersive AR experiences, balancing educational rigor with intuitive interaction design. Here’s how EduVerse came to life:

Design System:

VisionOS-Inspired UI: Clean, depth-aware menus with dynamic shadows for spatial clarity.

Gesture Shortcuts:

Tap to annotate, swipe to share models to LMS, pinch to zoom.

Customizable toolbar positions (left/right-handed support).
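The gesture shortcuts above can be thought of as a small lookup table. The sketch below is illustrative only: the type names, the `resolveAction` helper, and the idea that the toolbar docks on the side of the user's dominant hand are assumptions, not details taken from the EduVerse Pro build.

```typescript
// Hypothetical names for illustration; not from the actual EduVerse Pro code.
type Gesture = "tap" | "swipe" | "pinch";
type Action = "annotate" | "shareToLms" | "zoom";
type Handedness = "left" | "right";

// Gesture-to-action mapping as listed in the design system notes.
const gestureActions: Record<Gesture, Action> = {
  tap: "annotate",
  swipe: "shareToLms",
  pinch: "zoom",
};

// Look up which action a recognized gesture should trigger.
function resolveAction(gesture: Gesture): Action {
  return gestureActions[gesture];
}

// Assumption: the toolbar docks on the same side as the dominant hand.
function toolbarSide(handedness: Handedness): "left" | "right" {
  return handedness;
}
```

Keeping the mapping in one table makes it easy to review the shortcuts with professors and to change one without touching the others.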

Key Screens:

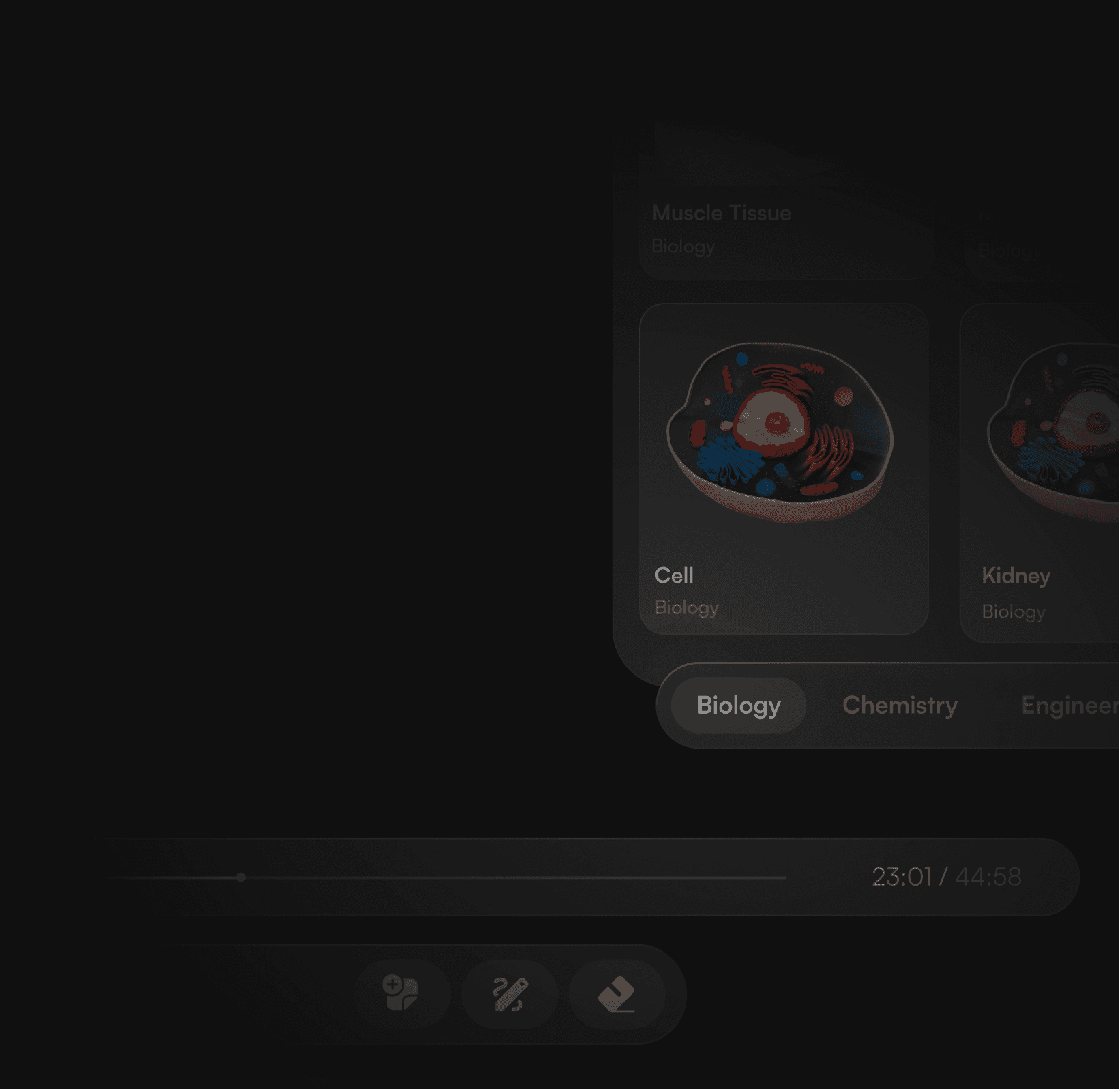

Model Library: Drag-and-drop 3D assets with auto-preview (e.g., rotating heart model).

Live Annotation Mode: Glowing ink effects for annotations (e.g., arteries pulse when highlighted).

Real-time sync: Students see annotations from their perspective.

High Fidelity components

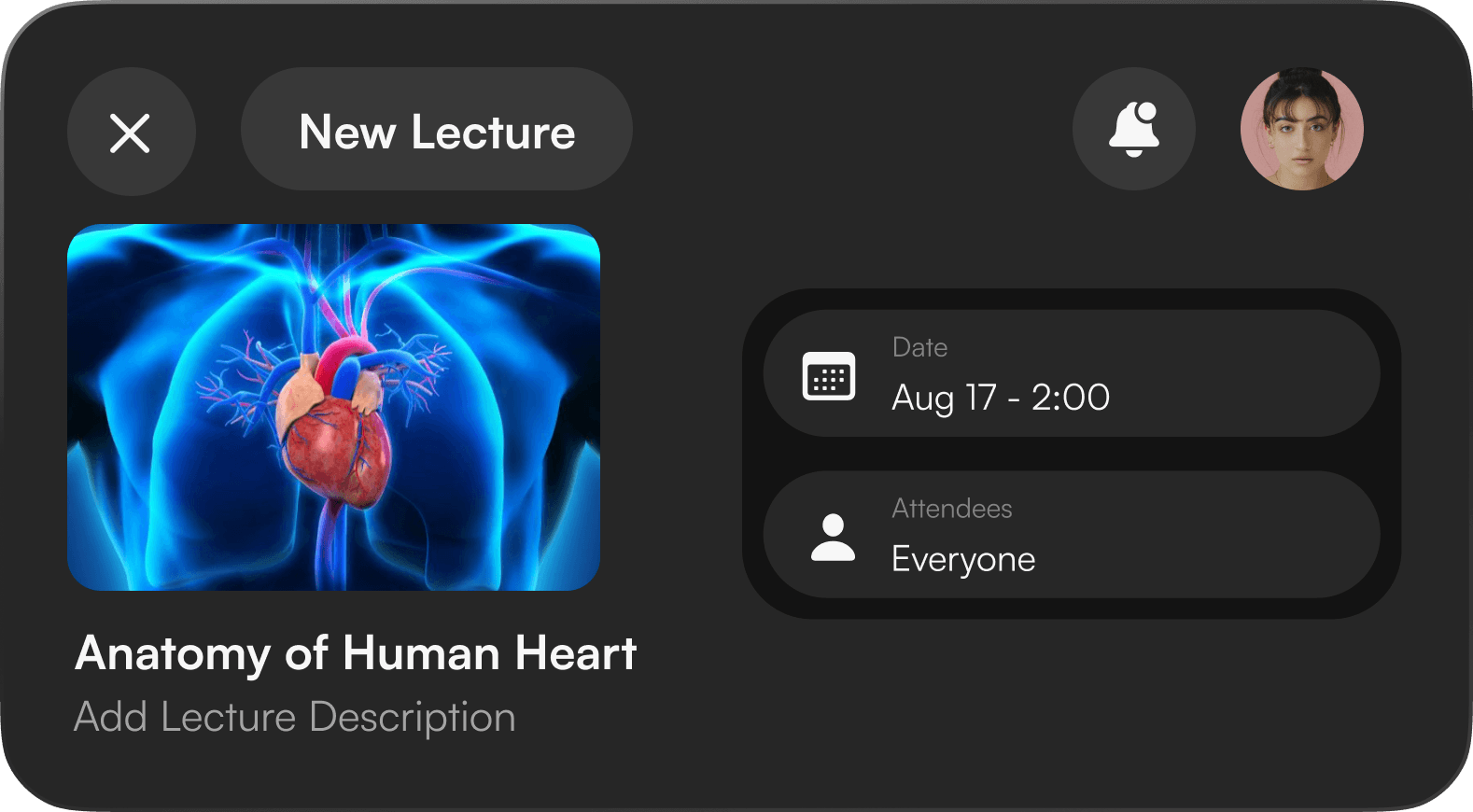

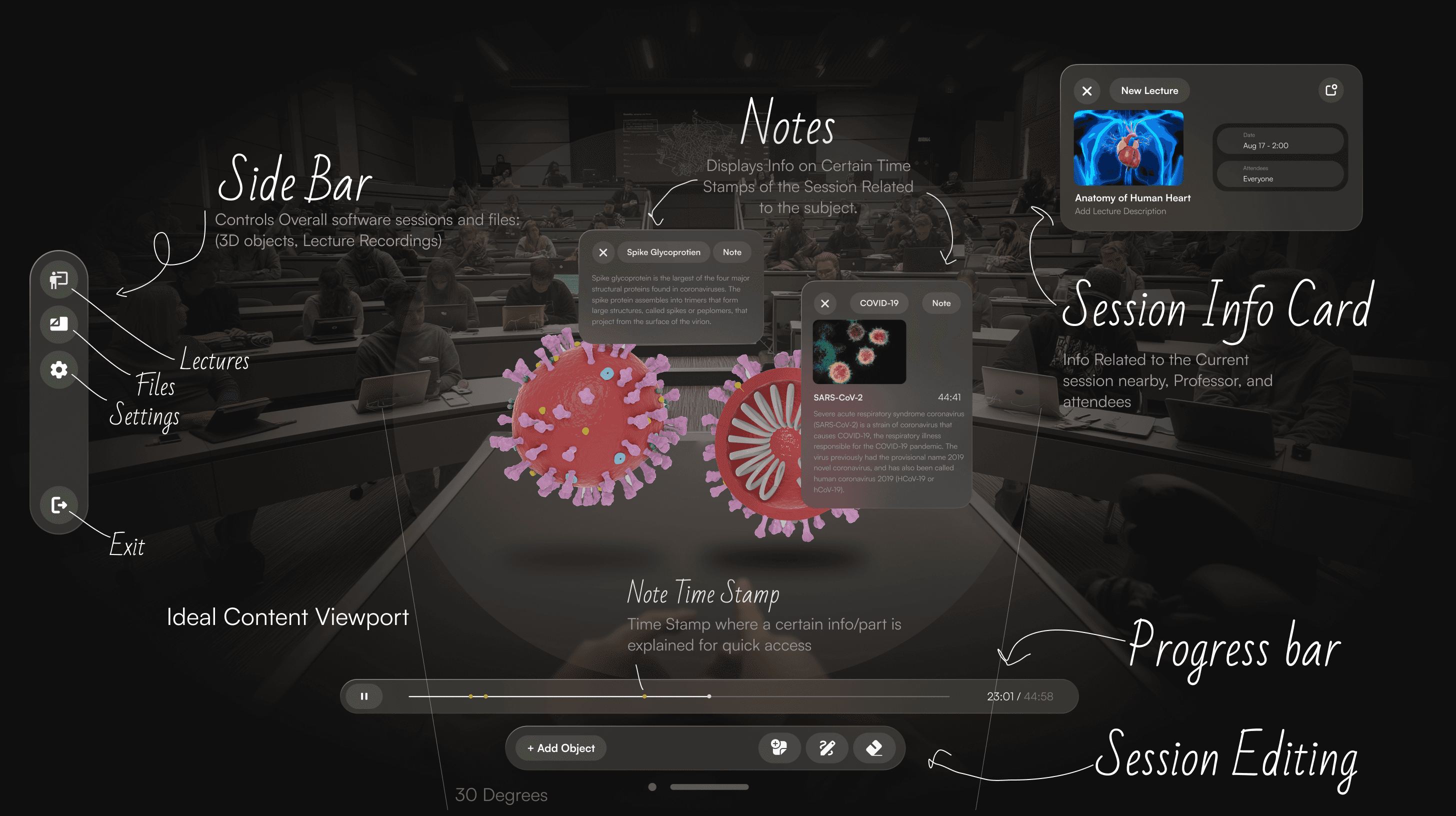

Professor Session Example

Screen Anatomy Explained

Main Functions

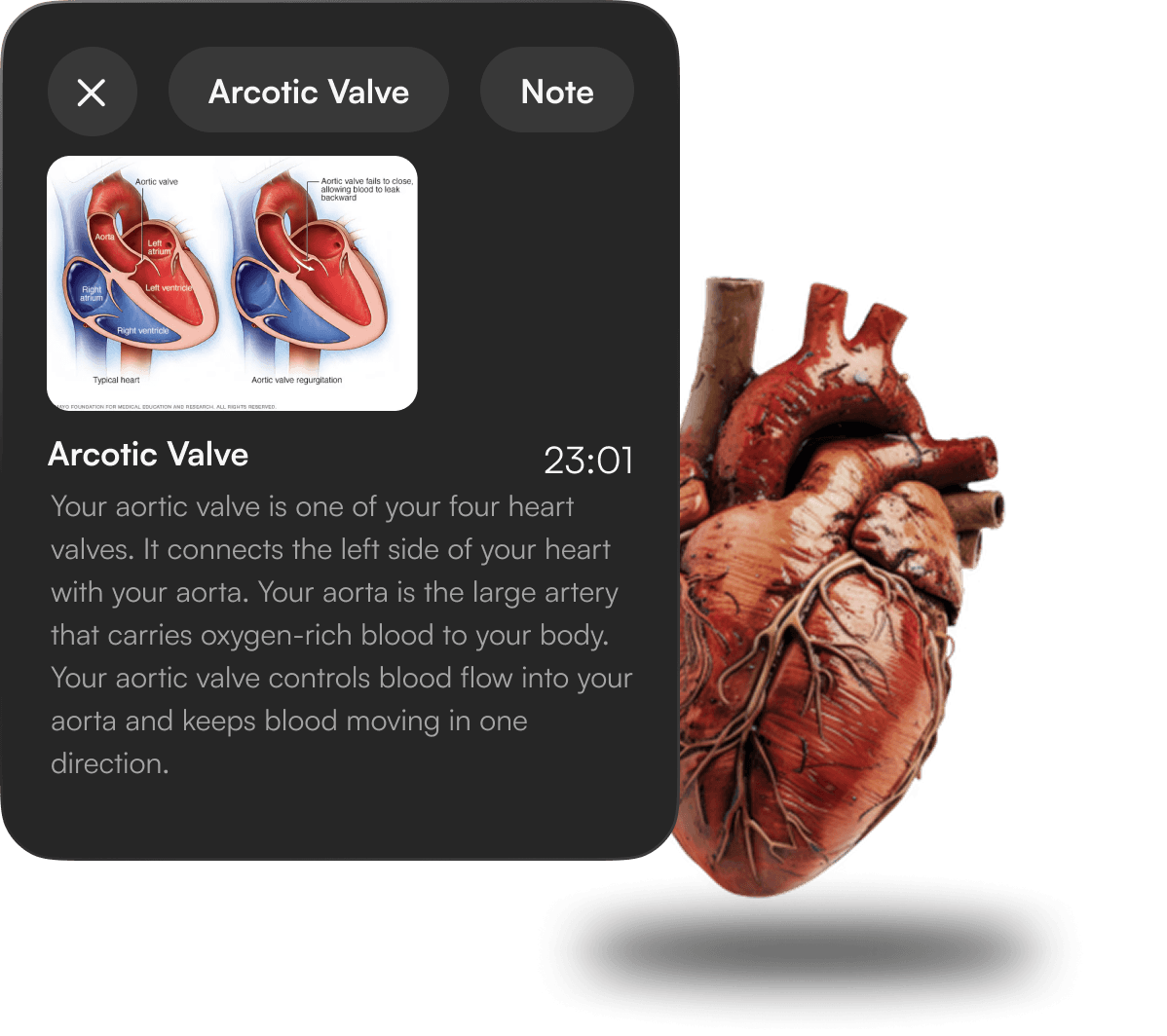

Floating Notes

What It Is:

Interactive AR annotations that users (professors/students) can place anywhere in 3D space during lectures or review sessions.

Purpose:

For Professors: Highlight key concepts (e.g., circling a ventricle) without blocking the 3D model.

For Students: Add contextual notes (text/voice) linked to specific parts of a lecture (e.g., “Remember: Purkinje fibers conduct impulses here”).

UX Rationale:

Reduces Cognitive Load: Notes stay anchored to the model, not the screen, mimicking real-world sticky notes.

Collaborative: Shared in real time during live sessions.
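One way to picture "anchored to the model, not the screen" is to store each note as an offset in the model's own coordinate space and compute its room position from the model's current pose. The sketch below assumes a simplified pose (position, rotation about the vertical axis, uniform scale); all type and function names are hypothetical and chosen for illustration.

```typescript
// Hypothetical types for illustration; not from the actual EduVerse Pro code.
type Vec3 = { x: number; y: number; z: number };

interface ModelPose {
  position: Vec3;     // where the model sits in the room (world space)
  yawRadians: number; // rotation about the vertical (Y) axis
  scale: number;      // uniform scale factor
}

interface FloatingNote {
  text: string;
  localOffset: Vec3;  // note position relative to the model (model space)
}

// Convert a note's model-space offset into a world-space position:
// scale first, then rotate about Y, then translate by the model position.
function noteWorldPosition(note: FloatingNote, pose: ModelPose): Vec3 {
  const sx = note.localOffset.x * pose.scale;
  const sy = note.localOffset.y * pose.scale;
  const sz = note.localOffset.z * pose.scale;
  const cos = Math.cos(pose.yawRadians);
  const sin = Math.sin(pose.yawRadians);
  return {
    x: pose.position.x + sx * cos + sz * sin,
    y: pose.position.y + sy,
    z: pose.position.z - sx * sin + sz * cos,
  };
}
```

Because only the offset is stored, moving, rotating, or resizing the heart model carries every note along with it, which is what makes the notes behave like sticky notes on a physical object.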

Lecture Info Card

What It Is:

A dynamic overlay displaying lecture metadata (title, professor, date) and quick actions.

Purpose:

At-a-Glance Context: Students verify they’re in the correct session (e.g., “Cardiac Anatomy – Dr. Lee – 10/15/24”).

Actions: Bookmark, share, or flag confusing moments.

UX Rationale:

Spatial Awareness: Positioned at the top edge of the AR view to avoid obscuring models.

Personalization: Auto-hides after 5 seconds of inactivity.
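The auto-hide rule can be sketched as a small visibility check driven by the time of the last interaction. This is a minimal illustration, assuming timestamps in milliseconds are passed in by the caller; the class and method names are hypothetical.

```typescript
// Hypothetical sketch; names are illustrative, not from the actual build.
const AUTO_HIDE_MS = 5000; // 5 seconds of inactivity, as described above

class InfoCardVisibility {
  private lastInteractionMs: number;

  constructor(nowMs: number) {
    this.lastInteractionMs = nowMs;
  }

  // Call whenever the user interacts with the card (bookmark, share, flag).
  registerInteraction(nowMs: number): void {
    this.lastInteractionMs = nowMs;
  }

  // The card stays visible until 5 seconds have passed without interaction.
  isVisible(nowMs: number): boolean {
    return nowMs - this.lastInteractionMs < AUTO_HIDE_MS;
  }
}
```

Passing the current time in explicitly, rather than starting a timer inside the class, keeps the rule easy to test and lets the render loop decide when to re-check visibility.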

Edit Bar (Object/Highlight Tools)

What It Is:

A contextual toolbar for adding 3D objects, highlighting areas, or drawing on models.

Purpose:

For Professors: Insert pre-built assets (e.g., blood cells) or highlight structures during lectures.

For Students: Mark areas for later review (e.g., “Flag this valve dysfunction”).

UX Rationale:

Gesture Integration: Tools activate via pinch (select) + drag (place).

Focus Mode: Auto-fades when unused to prioritize the 3D workspace.

Mobile View (Students)

Notetaking

Attach voice/text notes to specific parts of AR models.

Highlight & Mark

Highlight structures (glowing outlines) or flag areas for review.

Auto-syncs with notes for contextual studying.